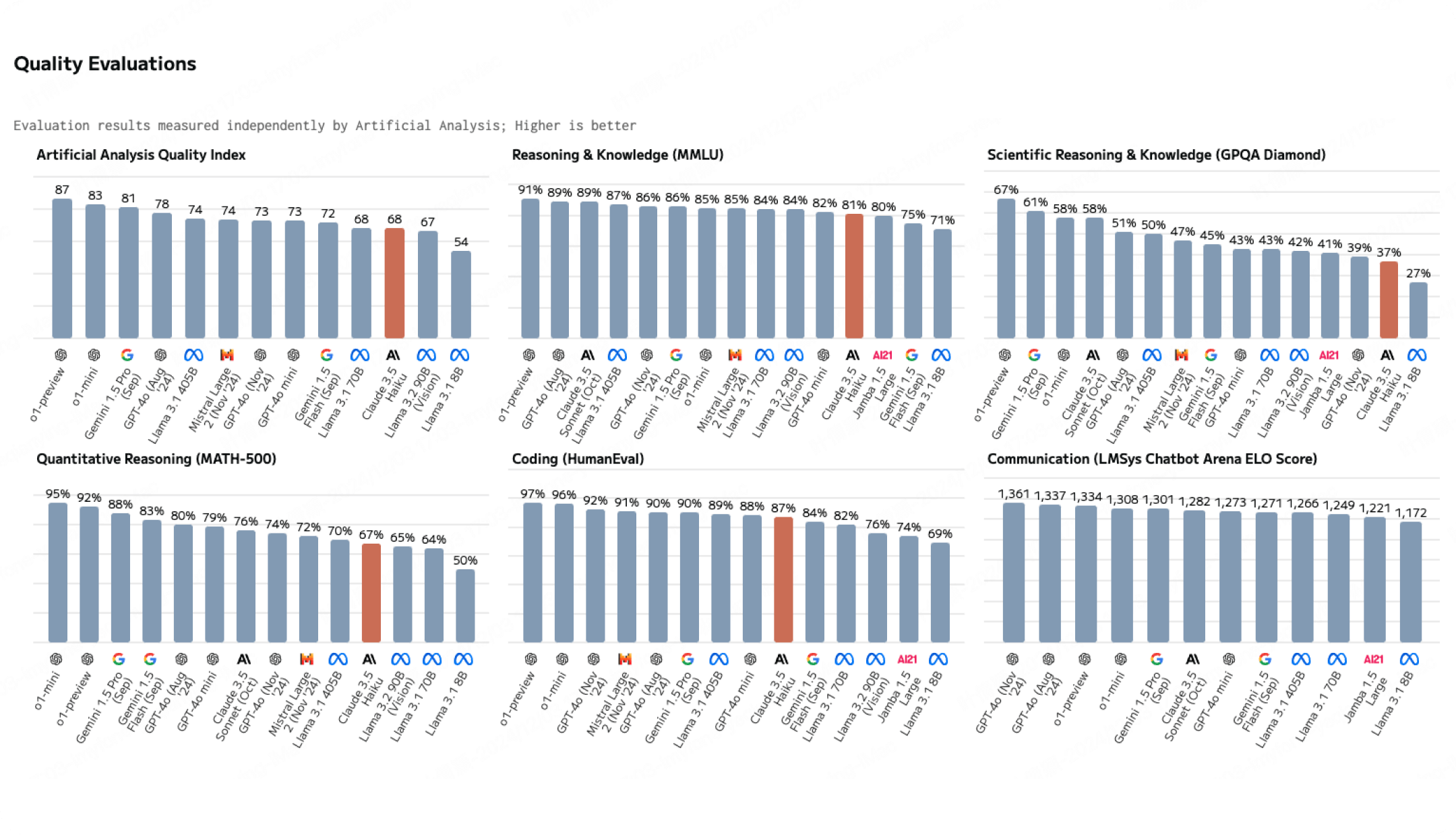

OpenAI

o1-preview

85 Score

With a Quality Index across evaluations of 85.

With an output speed of 36.4 tokens per second.

With a context window of 130k tokens.

OpenAI

GPT-4o mini

71 Score

With an MMLU score of 0.82 and a Quality Index across evaluations of 71.

With an output speed of 99.8 tokens per second.

With a context window of 130k tokens.

OpenAI

GPT-4o

77 Score

With an MMLU score of 0.887 and a Quality Index across evaluations of 77.

With an output speed of 86.6 tokens per second.

With a context window of 130k tokens.

OpenAI

GPT-4 Turbo

74 Score

With an MMLU score of 0.864 and a Quality Index across evaluations of 74.

GPT-4 Turbo is slower than average, with an output speed of 37.3 tokens per second.

GPT-4 Turbo has a smaller context window than average, at 130k tokens.

OpenAI

GPT-4

71 Score

With an MMLU score of 0.82 and a Quality Index across evaluations of 71.

With an output speed of 23.8 tokens per second.

With a context window of 8.2k tokens.

OpenAI

GPT-3.5 Turbo

52 Score

GPT-3.5 Turbo is of lower quality than average, with an MMLU score of 0.7 and a Quality Index across evaluations of 52.

GPT-3.5 Turbo is faster than average, with an output speed of 102.8 tokens per second.

GPT-3.5 Turbo has a smaller context window than average, at 16k tokens.

Anthropic

Claude 3.5 Sonnet

80 Score

With a Quality Index across evaluations of 80.

With an output speed of 55.6 tokens per second.

With a context window of 200k tokens.

Anthropic

Claude 3.5 Haiku

69 Score

With a Quality Index across evaluations of 69.

With an output speed of 63.2 tokens per second.

With a context window of 200k tokens.

Anthropic

Claude 3 Opus

70 Score

With an MMLU score of 0.868 and a Quality Index across evaluations of 70.

With an output speed of 27.2 tokens per second.

With a context window of 200k tokens.

Anthropic

Claude 3 Haiku

54 Score

With an MMLU score of 0.752 and a Quality Index across evaluations of 54.

With an output speed of 127.6 tokens per second.

With a context window of 200k tokens.
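To make the output-speed figures on the cards above easier to compare, the sketch below converts them into an approximate time to stream a response of a given length. The speeds are copied from the cards; the function name `generation_seconds` and the 500-token response length are illustrative assumptions, and the estimate ignores time to first token and network latency.

```python
# Output speeds in tokens per second, copied from the cards above.
OUTPUT_SPEED_TPS = {
    "o1-preview": 36.4,
    "GPT-4o mini": 99.8,
    "GPT-4o": 86.6,
    "GPT-4 Turbo": 37.3,
    "GPT-4": 23.8,
    "GPT-3.5 Turbo": 102.8,
    "Claude 3.5 Sonnet": 55.6,
    "Claude 3.5 Haiku": 63.2,
    "Claude 3 Opus": 27.2,
    "Claude 3 Haiku": 127.6,
}


def generation_seconds(model: str, output_tokens: int) -> float:
    """Rough streaming time: output tokens divided by output speed.

    Ignores time to first token and network latency (a simplifying assumption).
    """
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    return output_tokens / OUTPUT_SPEED_TPS[model]


if __name__ == "__main__":
    # List models from fastest to slowest for a hypothetical 500-token reply.
    ranked = sorted(OUTPUT_SPEED_TPS, key=OUTPUT_SPEED_TPS.get, reverse=True)
    for model in ranked:
        print(f"{model:<18} {generation_seconds(model, 500):6.1f} s")
```

At these speeds, the same reply takes several times longer on the slowest listed model than on the fastest, which is worth weighing against the quality scores.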

Google

Gemini 1.5 Pro

80 Score

With a Quality Index across evaluations of 80.

With an output speed of 59.1 tokens per second.

With a context window of 2.0M tokens.

Google

Gemini 1.5 Flash

73 Score

With a Quality Index across evaluations of 73.

With an output speed of 190.4 tokens per second.

With a context window of 1.0M tokens.

Google

Gemini 1.0 Pro

60 Score

With a price of $0.75 per 1M Tokens (blended 3:1).

With an output speed of 102.1 tokens per second.

With a context window of 33k tokens.
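The "blended 3:1" price quoted on the Gemini 1.0 Pro card is not defined on this page. A common reading, assumed here, is a weighted average of per-million-token prices with three parts input to one part output. The sketch below shows that arithmetic; the function name `blended_price` and the input and output prices in the example are hypothetical values chosen only to illustrate how a figure of $0.75 could arise, not prices taken from this page.

```python
def blended_price(input_price: float, output_price: float,
                  input_parts: int = 3, output_parts: int = 1) -> float:
    """Weighted average price per 1M tokens.

    Assumes "blended 3:1" means 3 parts input tokens to 1 part output tokens.
    """
    total_parts = input_parts + output_parts
    if total_parts <= 0:
        raise ValueError("ratio parts must sum to a positive number")
    return (input_parts * input_price + output_parts * output_price) / total_parts


if __name__ == "__main__":
    # Hypothetical prices in USD per 1M tokens, for illustration only.
    example_input_price = 0.50
    example_output_price = 1.50
    print(f"${blended_price(example_input_price, example_output_price):.2f} per 1M tokens")
```

Under this reading, input pricing dominates the blended figure, so a model with cheap input but expensive output can look cheaper here than it would be for output-heavy workloads.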

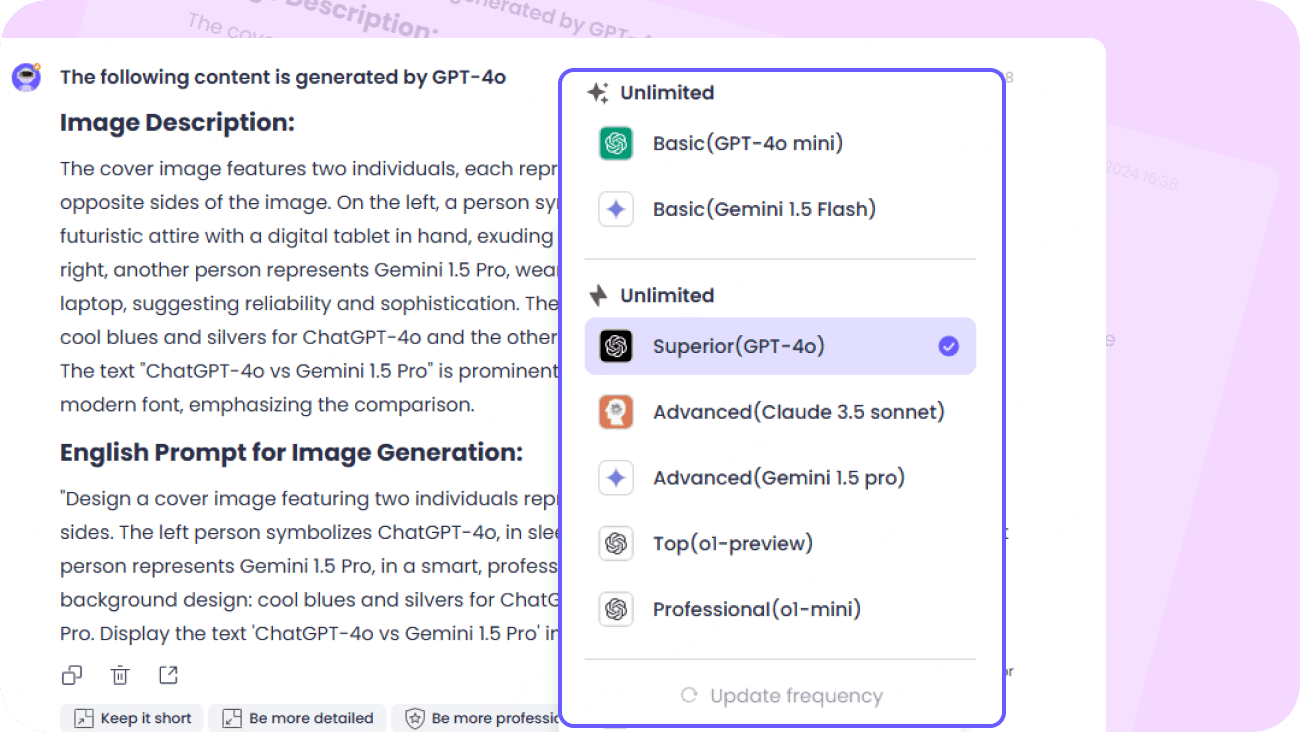

AI Writer

AI Image

AI Chat

Email Writer

Novel Writer

DeepSeek R1&V3

GPT-4o & o3-mini

Claude 3.7 sonnet

Gemini 2.0 Pro

GPT-4o mini